Code at: https://github.com/yashwantherukulla/DDPM-From-Scratch

Linear Noise Scheduler

Forward Process

- Given $x_0$ and a timestep $t$, return the noised sample $x_t$.

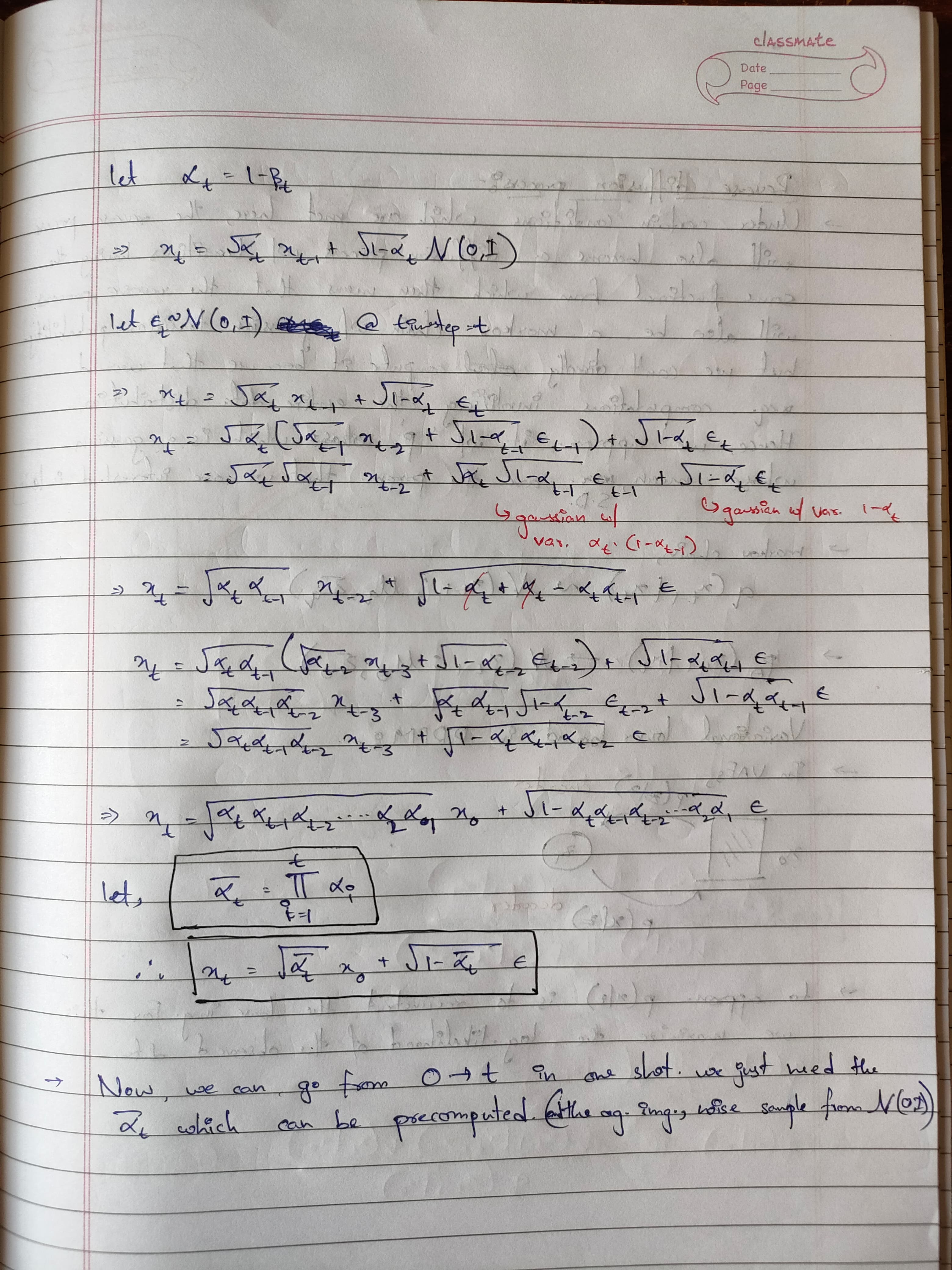

- To keep this efficient, we pre-compute and store the $\alpha_t$ values and their cumulative product terms $\bar{\alpha}_t$ in advance.

- Here $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$.

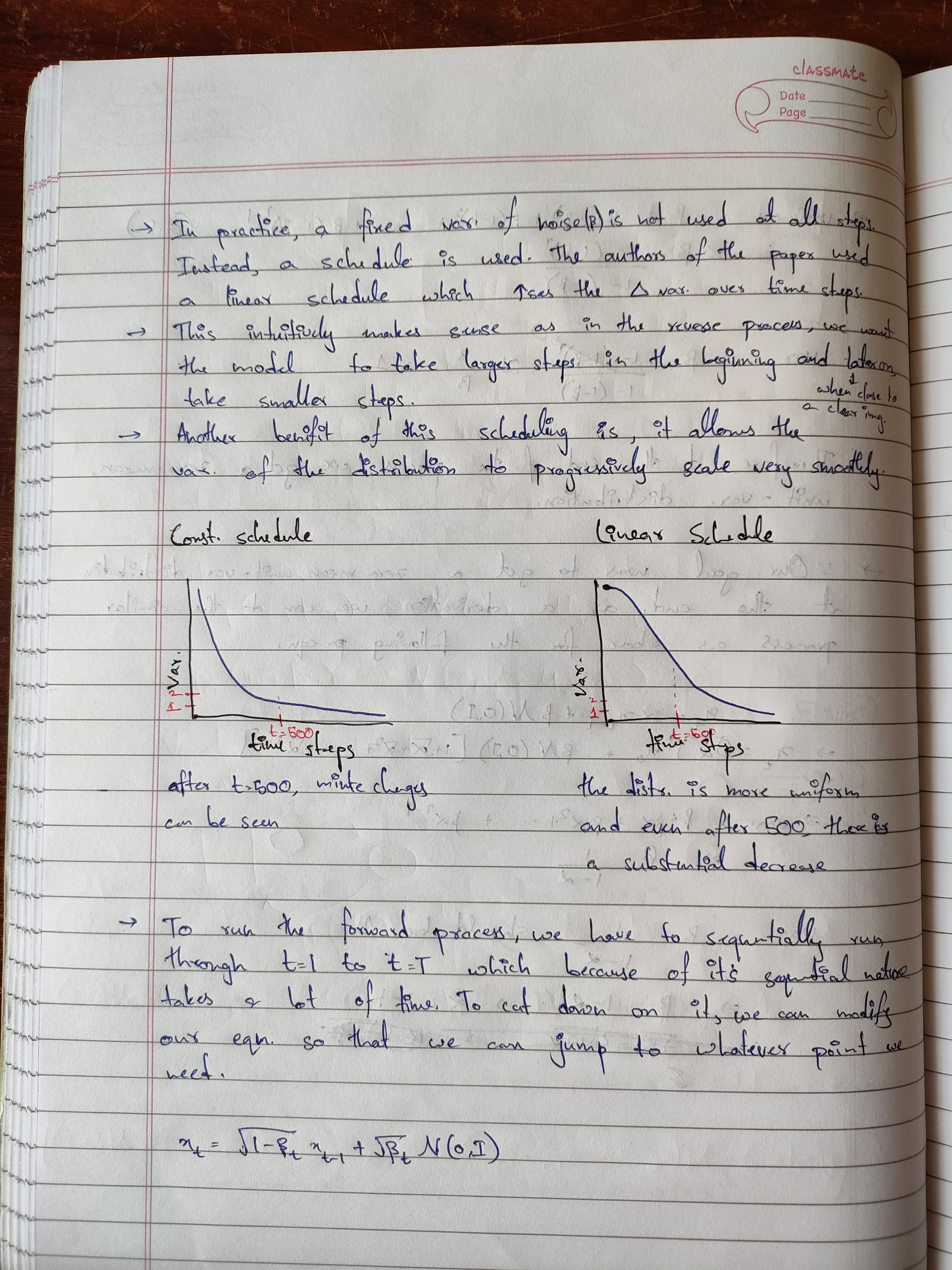

- The authors use a linear noise scheduler in which $\beta_t$ is scaled linearly from $\beta_1 = 10^{-4}$ to $\beta_T = 0.02$, with $T = 1000$.
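
The scheduler and the closed-form forward step $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$ could be sketched roughly as below. This is a minimal, dependency-free illustration on flat Python lists, not the repository's code: the names `linear_beta_schedule` and `add_noise` are hypothetical, timesteps are assumed to be 0-based indices, and the default values assume the linear schedule from the DDPM paper. A tensor library would vectorize the same arithmetic.

```python
import math
import random


def linear_beta_schedule(num_timesteps=1000, beta_start=1e-4, beta_end=0.02):
    """Pre-compute betas, alphas and cumulative alpha products (assumed defaults)."""
    step = (beta_end - beta_start) / (num_timesteps - 1)
    betas = [beta_start + i * step for i in range(num_timesteps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars = []
    running = 1.0
    for a in alphas:
        running *= a  # cumulative product: alpha_bar_t = alpha_1 * ... * alpha_t
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def add_noise(x0, t, alpha_bars, rng=random):
    """Sample x_t from q(x_t | x_0) in one shot; t is a 0-based index (assumption)."""
    sqrt_ab = math.sqrt(alpha_bars[t])
    sqrt_one_minus_ab = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [sqrt_ab * x + sqrt_one_minus_ab * n for x, n in zip(x0, noise)]
    return xt, noise


betas, alphas, alpha_bars = linear_beta_schedule()
xt, noise = add_noise([0.5, -1.0, 2.0], t=999, alpha_bars=alpha_bars)
```

Because $\bar{\alpha}_t$ shrinks toward zero as $t$ grows, the sample at the last timestep is almost pure Gaussian noise, which is why the lookup tables are worth computing once up front.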

Reverse Process

- Given $x_t$ (and the model's noise prediction), returns $x_{t-1}$, i.e., by sampling from the reverse distribution.

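- where the reverse distribution $\mathcal{N}(\mu_\theta, \sigma_t^2 I)$ has mean $\mu_\theta(x_t, t) = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\,\epsilon_\theta(x_t, t)\right)$ and variance $\sigma_t^2 = \frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\,\beta_t$.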

- We will pre-compute and store,

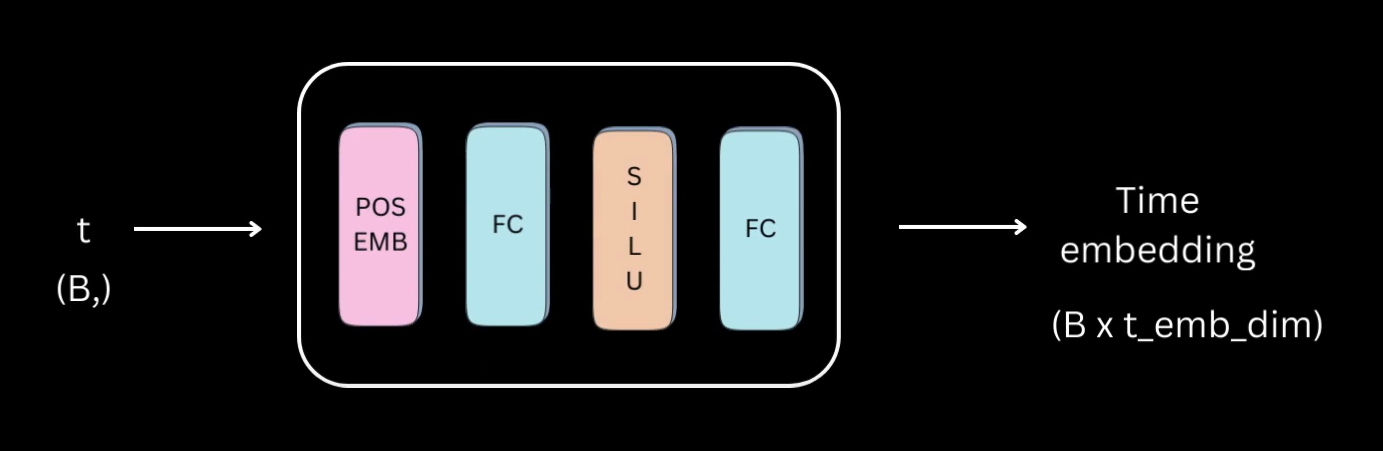

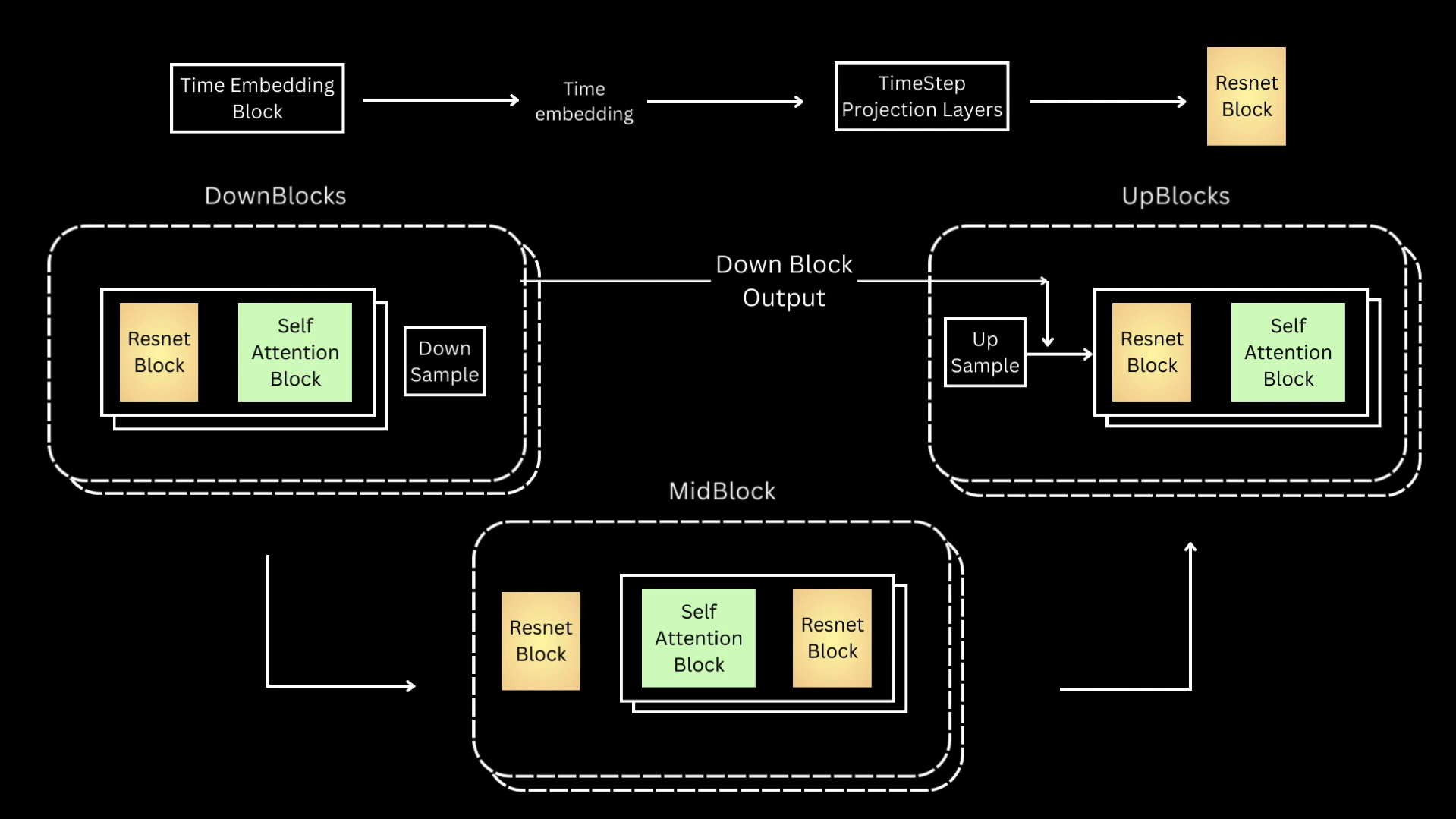
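
One reverse step could look roughly like the sketch below. It is an illustration under stated assumptions rather than the repository's implementation: `sample_prev_timestep` is a hypothetical name, inputs are flat Python lists, timesteps are 0-based, the schedule values are the assumed linear DDPM defaults, and the variance used is the posterior variance $\frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\beta_t$ (the DDPM paper also allows $\sigma_t^2 = \beta_t$).

```python
import math
import random

# Illustrative schedule (assumed values: linear betas from 1e-4 to 0.02, T = 1000).
T = 1000
betas = [1e-4 + i * (0.02 - 1e-4) / (T - 1) for i in range(T)]
alphas = [1.0 - b for b in betas]
alpha_bars = []
running = 1.0
for a in alphas:
    running *= a
    alpha_bars.append(running)


def sample_prev_timestep(xt, noise_pred, t, betas, alphas, alpha_bars, rng=random):
    """One reverse step x_t -> x_{t-1}, given the model's noise prediction."""
    # Mean of the reverse distribution; betas[t] plays the role of (1 - alpha_t).
    coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
    mean = [(x - coef * e) / math.sqrt(alphas[t]) for x, e in zip(xt, noise_pred)]
    if t == 0:
        return mean  # no noise is added on the final step
    # Posterior variance (one of the two choices discussed in the DDPM paper).
    variance = betas[t] * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t])
    sigma = math.sqrt(variance)
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


# Stand-in for a trained model: here the "prediction" is just zeros.
x_prev = sample_prev_timestep([0.1, -0.4], [0.0, 0.0], 500, betas, alphas, alpha_bars)
```

In a full sampling loop this function would be called for every timestep from the last down to the first, feeding each output back in as the next `xt`.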

Time Embedding Block

- Given a 1D tensor of timesteps of shape `(B,)`, this block will output a Time Embedding of shape `(B, t_emb_dim)`.

- The input passes through this block as follows:

- Positional encoding: the sinusoidal positional encoding used in Transformers

- FC (fully connected layer)

- SiLU (Sigmoid Linear Unit activation)

- FC (fully connected layer)

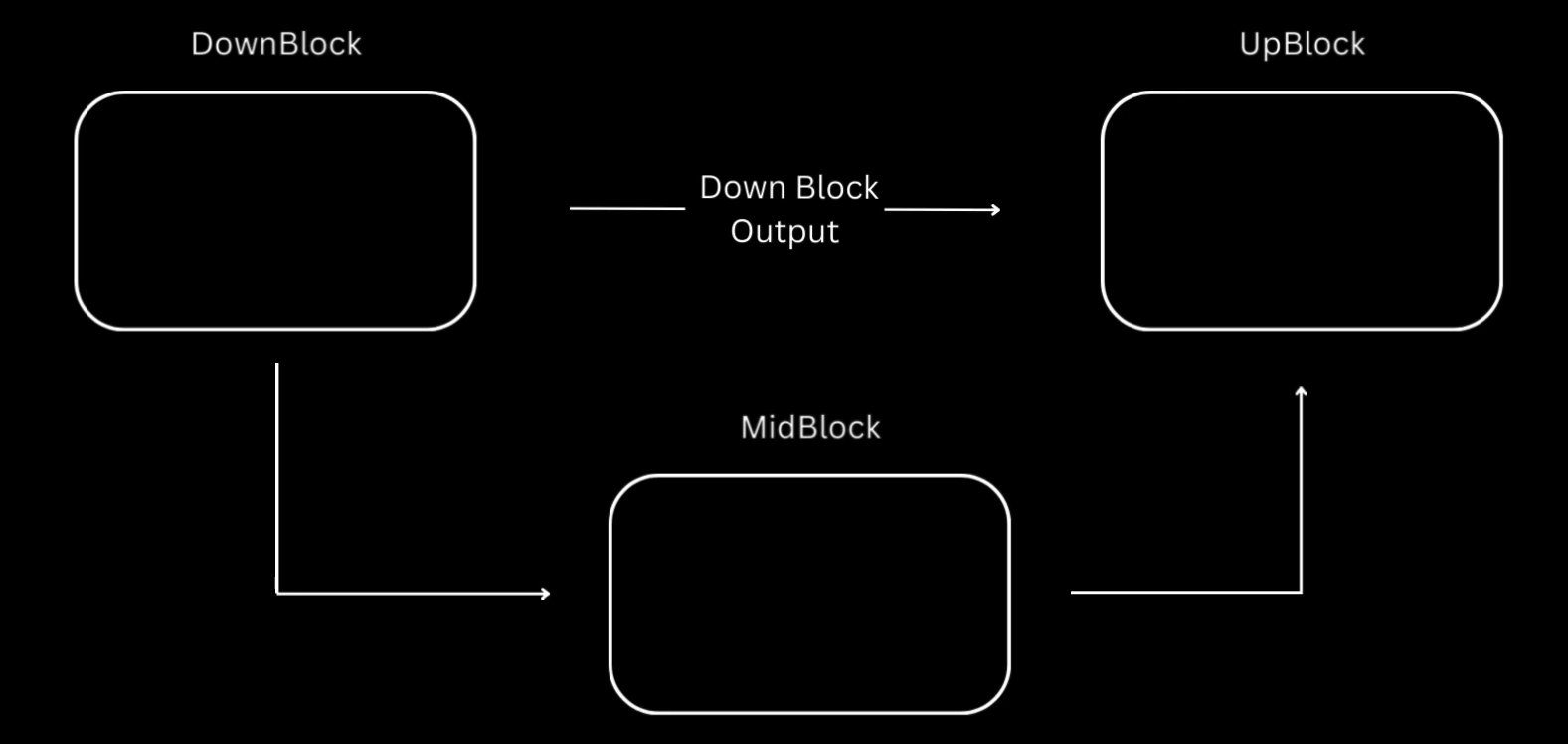

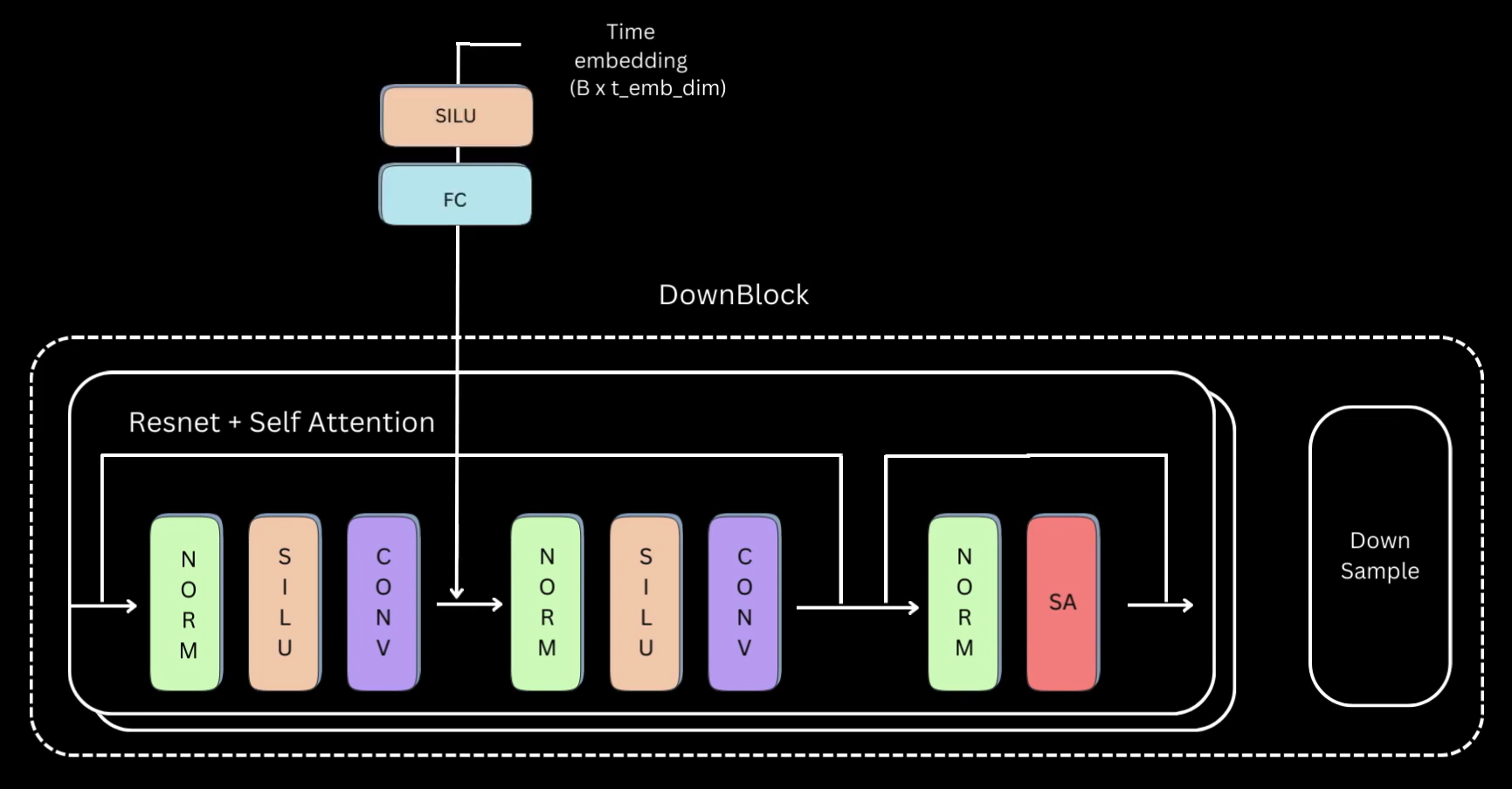
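
The first and third stages of this block could be sketched as below, in plain Python for illustration. The names `sinusoidal_embedding` and `silu` are hypothetical, the layout (all sines followed by all cosines, base 10000) is an assumption borrowed from the usual Transformer convention, and the two FC layers are left out because they need learned weights.

```python
import math


def sinusoidal_embedding(timesteps, emb_dim):
    """Map each timestep to a vector of emb_dim values: sines first, then cosines."""
    assert emb_dim % 2 == 0, "emb_dim is assumed to be even"
    half = emb_dim // 2
    # Geometric range of frequencies, from 1 down towards 1/10000.
    freqs = [10000.0 ** (-i / half) for i in range(half)]
    return [
        [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]
        for t in timesteps
    ]


def silu(x):
    """SiLU activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


# A batch of B = 3 timesteps becomes a 3 x 8 embedding.
emb = sinusoidal_embedding([0, 10, 999], emb_dim=8)
activated = [[silu(v) for v in row] for row in emb]
```

Each timestep gets a distinct, smoothly varying vector, so nearby timesteps produce similar embeddings while distant ones remain distinguishable.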

U-Net Model

- Inspired by Stable Diffusion's U-Net architecture on Hugging Face

- Multiple layers of the block in the image above are stacked to form the down block in the image below.

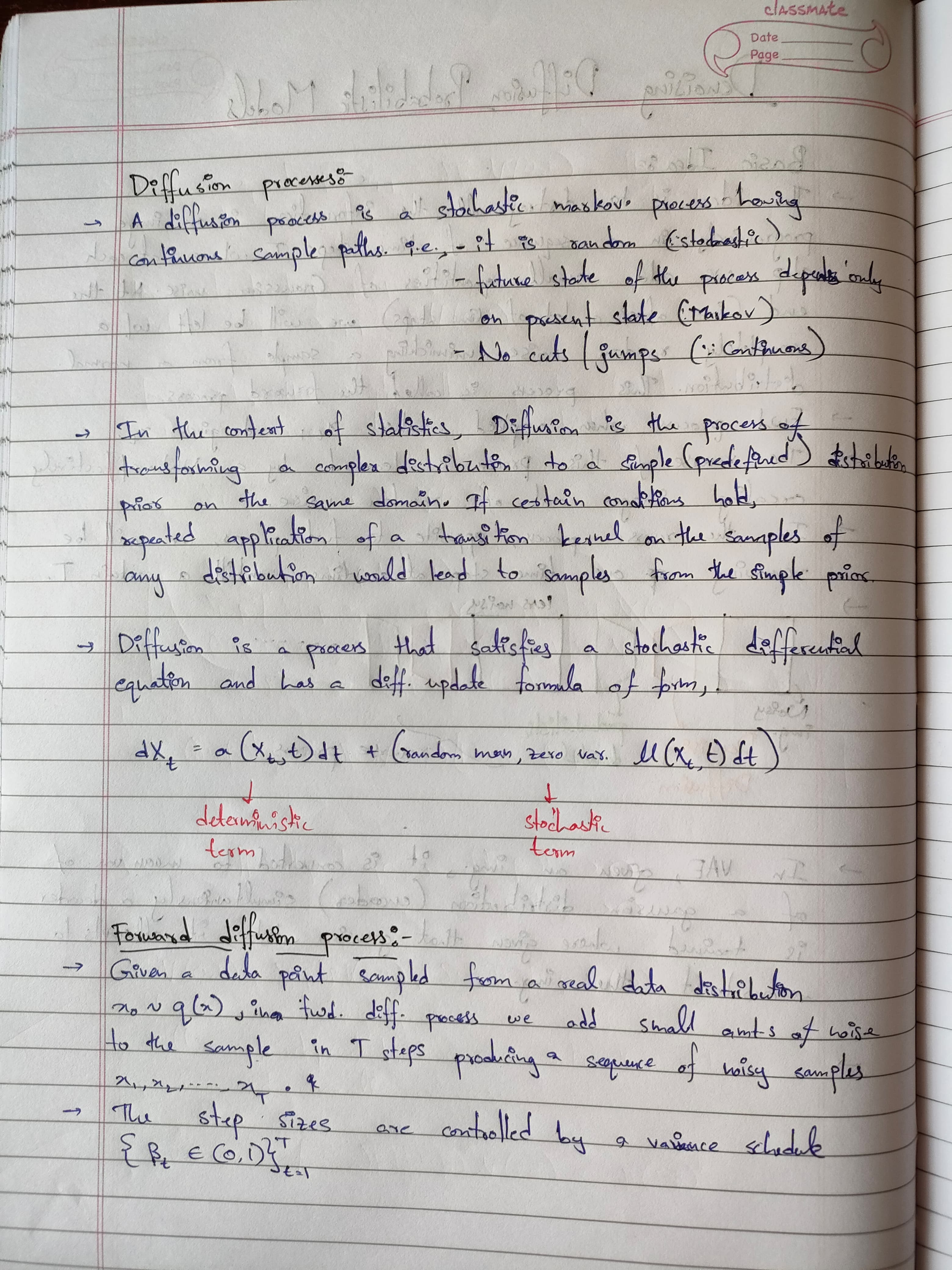

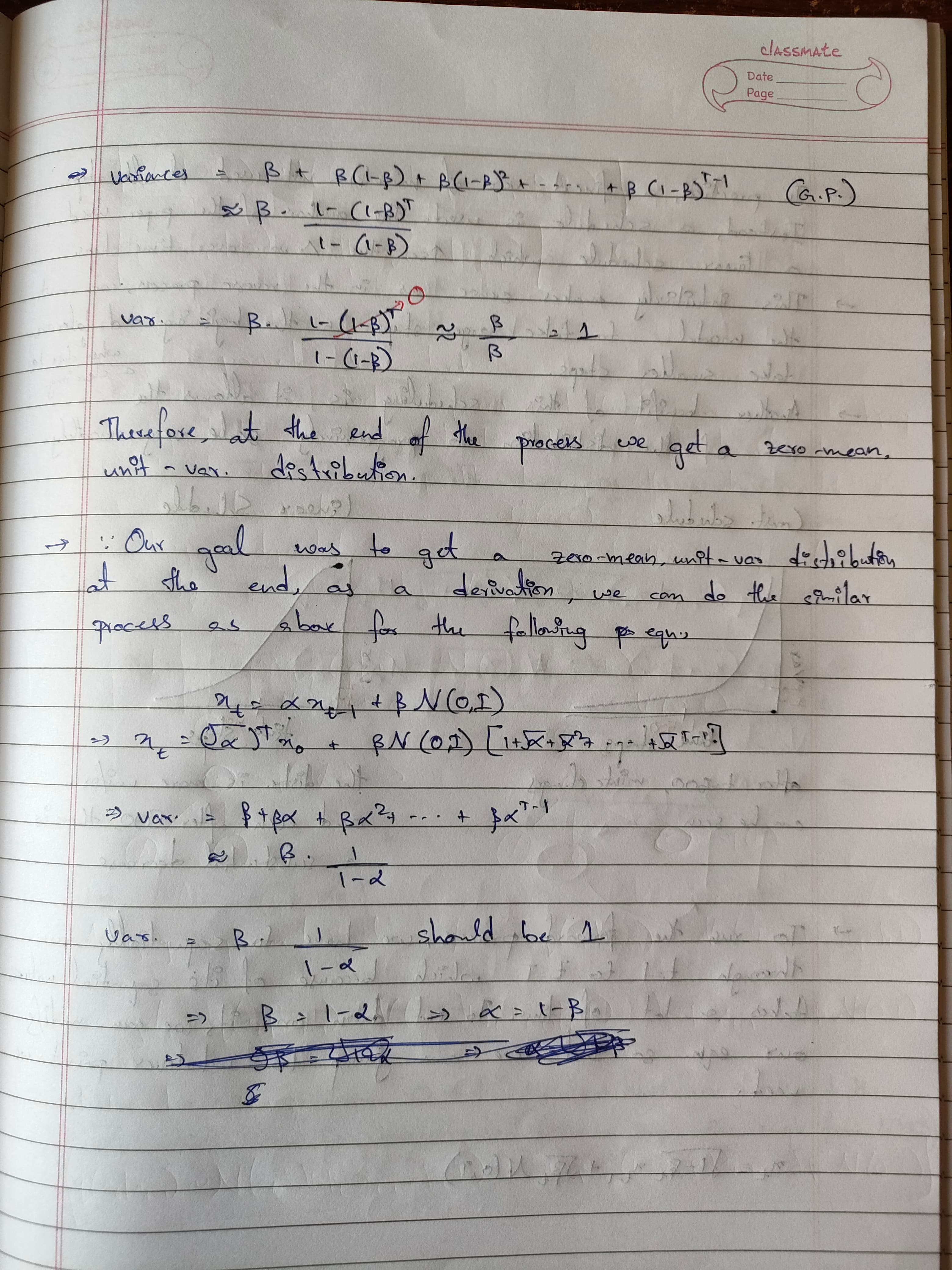

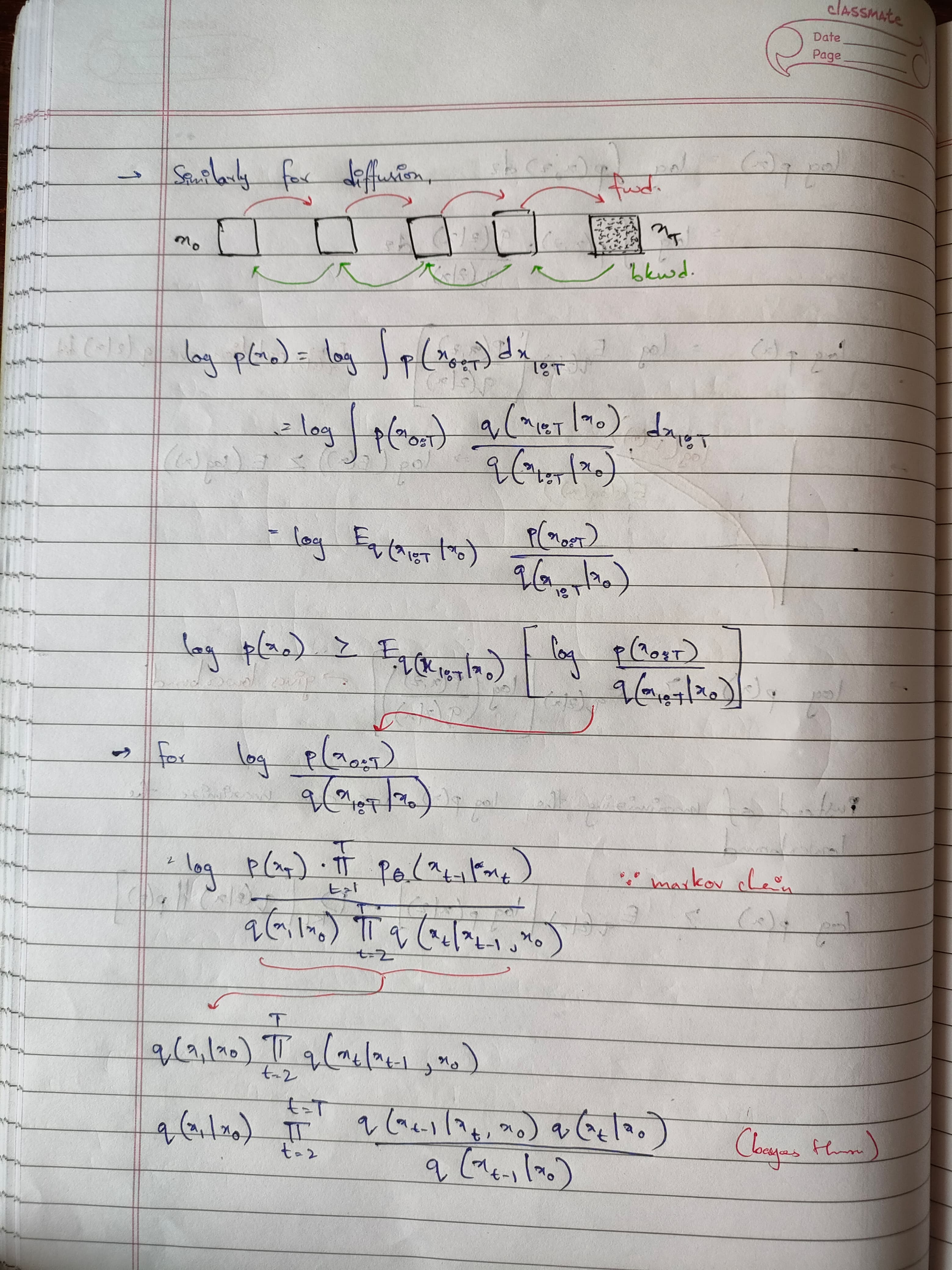

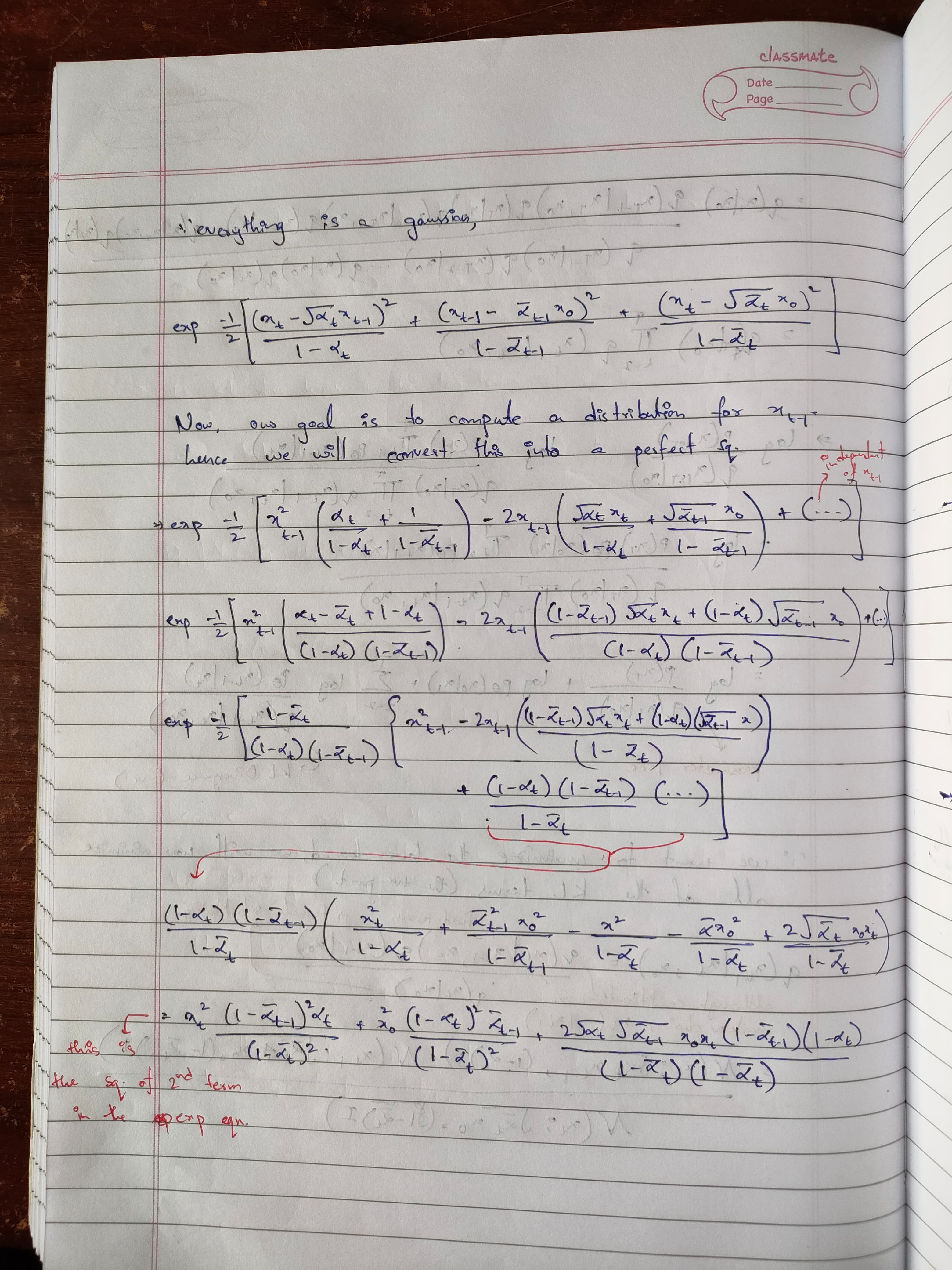

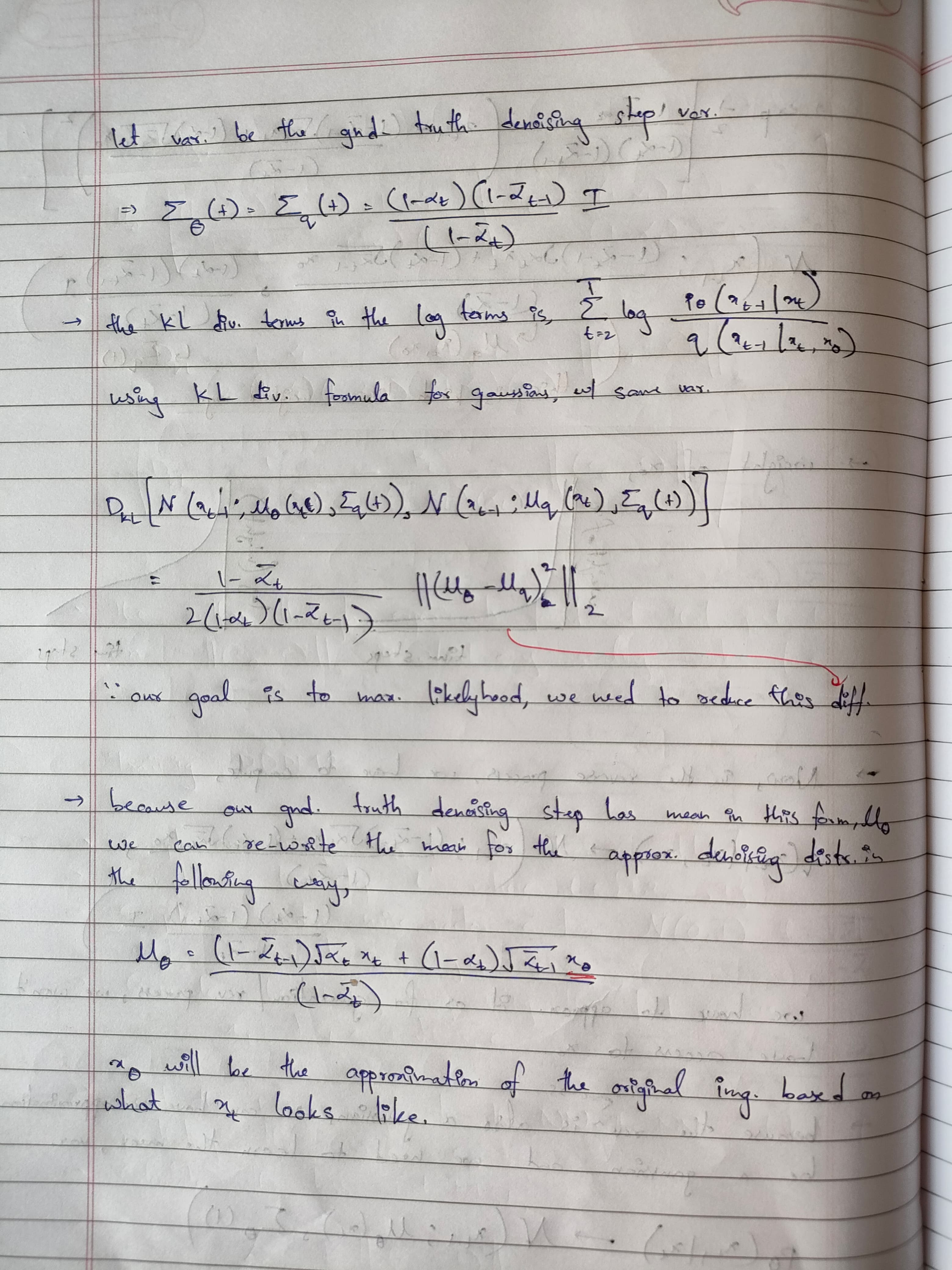

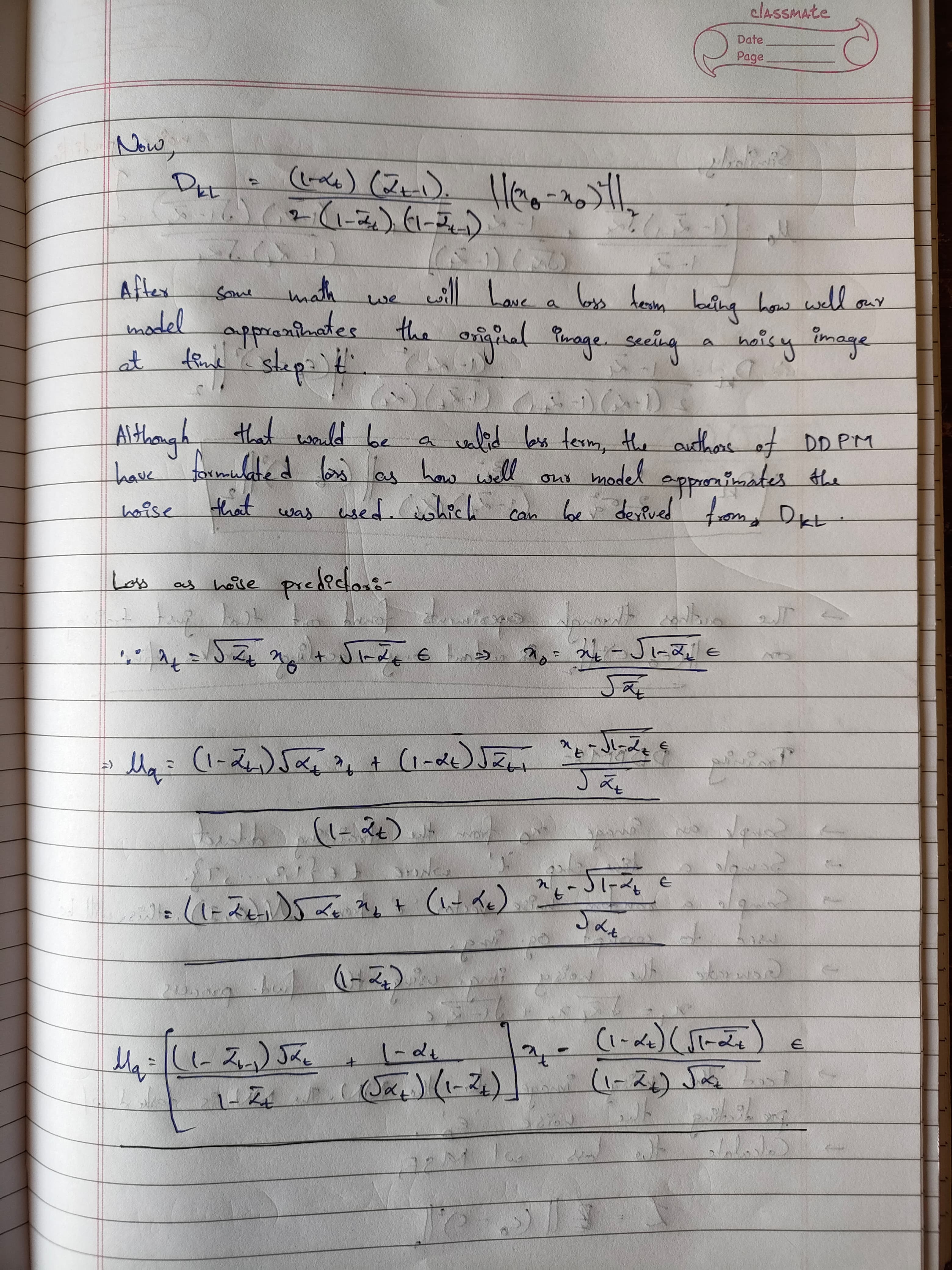

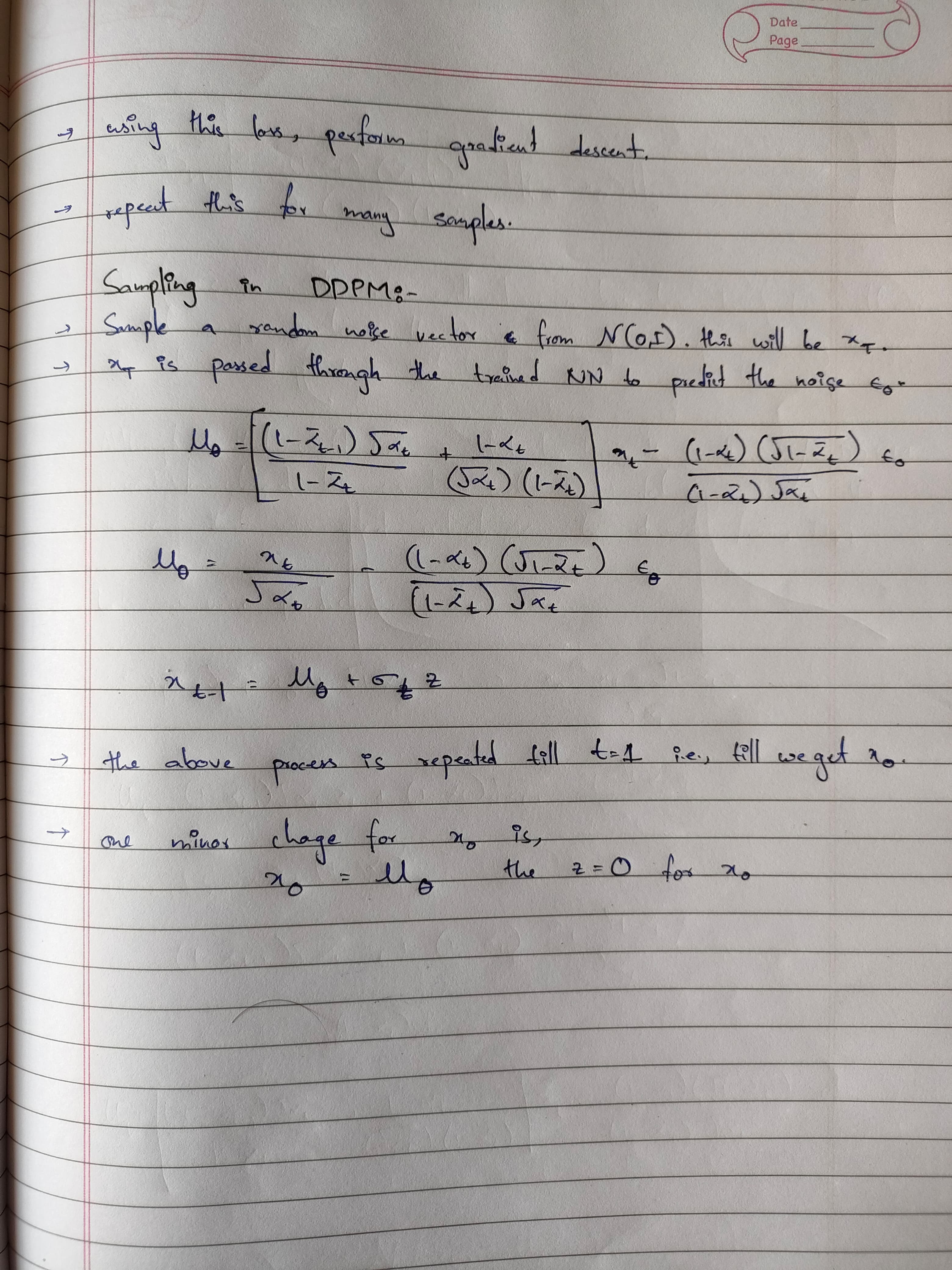

Handwritten Notes on Detailed Math